Here is my next article, this time on MapReduce, the heart of Hadoop. Let's get into the details.

Hadoop Components

Broadly, the Hadoop system consists of two components:

Storage -> Hadoop uses the HDFS file system to store files and directories. If you want to understand HDFS and how it stores files, please refer to my previous article on HDFS.

Compute -> For compute, the Hadoop framework uses MapReduce.

Let's discuss MapReduce in detail and understand how MapReduce processes a huge amount of data in parallel.

What is MapReduce?

MapReduce, aka MR, is a distributed computing engine that processes large amounts of data in parallel across many machines. To be precise, it is a processing model/engine that processes huge volumes of HDFS files efficiently.

MapReduce Architecture

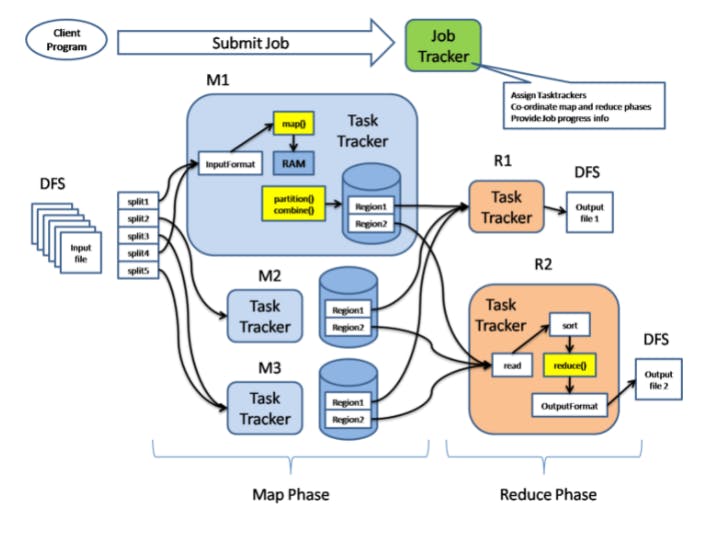

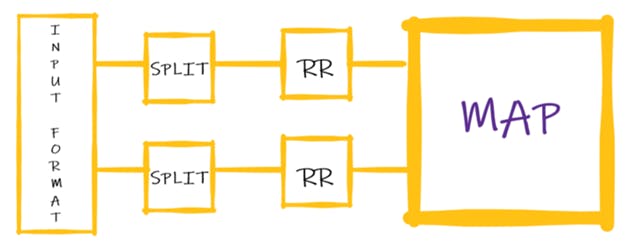

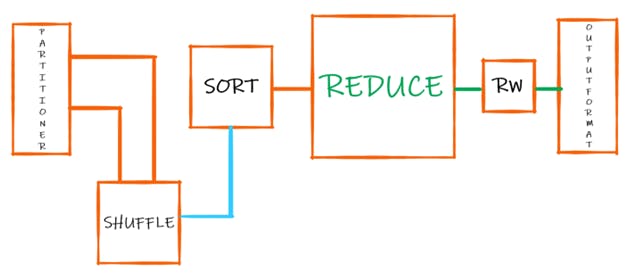

The diagrams above show the MapReduce architecture.

As the name suggests, MapReduce has two major phases: Map and Reduce.

In the Map phase, the data is split into chunks that are processed in parallel. Once the map tasks complete, their results become the input to the Reduce phase, which processes them and produces the final output.

In the Hadoop world, both the input and the output of MapReduce are HDFS files, as shown below.

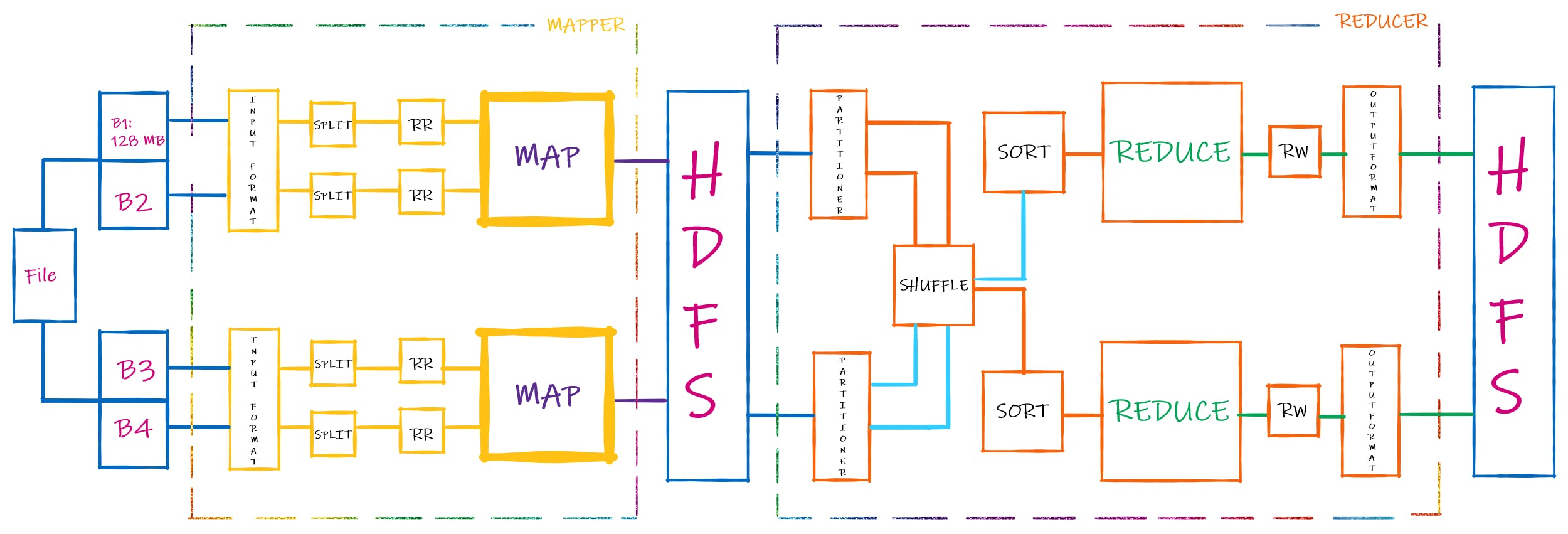

An HDFS file is split into chunks referred to as blocks. MapReduce processes those blocks and produces the results.

MapReduce explained:

Mapper:

In MapReduce, everything is a key-value pair. The mapper is the function that takes a key and a value as input and generates intermediate key-value pairs.

map(key, value) -> list(intermediate_key, intermediate_value)
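To make the signature concrete, here is a minimal sketch of a word-count mapper in plain Python. It does not use the Hadoop API; the function name `map_words` and the sample line are my own illustrations, and word count is just an assumed example job.

```python
from typing import Iterator, Tuple


def map_words(key: int, value: str) -> Iterator[Tuple[str, int]]:
    """Emit one (word, 1) pair per word in the input line.

    `key` is the byte offset of the line; this mapper does not need it.
    """
    for word in value.split():
        yield (word.lower(), 1)


# One input record produces a list of intermediate key-value pairs.
pairs = list(map_words(0, "Hadoop stores data and Hadoop processes data"))
print(pairs)
```

Notice that the mapper does no counting at all. It only emits pairs; adding them up is left to the reduce side.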

Input format

The input format defines how the input files are divided and read. It ties together all the blocks of the input files and decides how they are grouped into input splits.

Input Split

An input split is a logical block of data. In most cases it is the same as a physical block.

Confused? Suppose we have a 129 MB file and a block size of 128 MB. The file is stored as two blocks, B1 and B2, of 128 MB and 1 MB respectively.

Here, the physical blocks are the actual blocks B1 (128 MB) and B2 (1 MB). The logical block is different: because the second block is so small, and joining the two blocks gives the complete file, the MR framework groups B1 and B2 together logically and processes them as one split.
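The 129 MB example can be sketched in a few lines of Python. This is a simplified model, not Hadoop's actual code: it assumes the split size equals the block size and that the last split may be up to 10% larger than the split size (the slop factor used by Hadoop's `FileInputFormat`). The names `compute_splits` and `SPLIT_SLOP` are mine.

```python
from typing import List, Tuple

MB = 1024 * 1024
SPLIT_SLOP = 1.1  # assumption: the last split may exceed split_size by up to 10%


def compute_splits(file_size: int, split_size: int) -> List[Tuple[int, int]]:
    """Return (start_offset, length) for each logical split of a file."""
    splits = []
    remaining = file_size
    # Cut full-size splits while more than 1.1 split sizes remain.
    while remaining / split_size > SPLIT_SLOP:
        splits.append((file_size - remaining, split_size))
        remaining -= split_size
    # Whatever is left (possibly slightly over split_size) is the last split.
    if remaining > 0:
        splits.append((file_size - remaining, remaining))
    return splits


small_tail = compute_splits(129 * MB, 128 * MB)  # one split covering B1 and B2
large_tail = compute_splits(300 * MB, 128 * MB)  # three splits
print(len(small_tail), len(large_tail))
```

With a 1 MB tail the whole file becomes a single 129 MB split, so only one map task is started. With a 300 MB file the 44 MB tail is too large to fold in, so it gets its own split.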

Record Reader :

The record reader processes the blocks and converts them into key-value pairs. By default, the byte offset of a line is the key and the content of the line is the value.
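Here is a rough Python sketch of that default behaviour, to show what "byte offset as key" means in practice. It reads from an in-memory byte string instead of HDFS, and `read_records` is a hypothetical helper name, not a Hadoop class.

```python
from typing import Iterator, Tuple


def read_records(data: bytes) -> Iterator[Tuple[int, str]]:
    """Yield (byte_offset, line) pairs, one per line of the input."""
    offset = 0
    for raw_line in data.splitlines(keepends=True):
        # The key is where the line starts; the value is the line
        # without its trailing newline.
        yield (offset, raw_line.rstrip(b"\r\n").decode("utf-8"))
        offset += len(raw_line)


records = list(read_records(b"hello world\nhello hadoop\n"))
print(records)
```

The second key is 12 because the first line takes 11 bytes plus one newline byte.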

Map:

Now we have key-value pairs of data, which are passed to the map function. Whatever logic is implemented in the map program runs on each input key-value pair and generates intermediate key-value pairs on the data nodes.

Mapper Phase complete...

Reducer:

Reduce is the function that takes the intermediate key-value pairs and generates the final output as key-value pairs.

reduce(intermediate_key, list(intermediate_values)) -> list(out_key, out_value)
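As an illustration, a word-count reducer could look like the sketch below. It is plain Python rather than the Hadoop API, and the name `reduce_counts` is hypothetical. The function is called once per key, with all the values collected for that key.

```python
from typing import Iterable, Iterator, Tuple


def reduce_counts(key: str, values: Iterable[int]) -> Iterator[Tuple[str, int]]:
    """Sum all the counts emitted for one word."""
    yield (key, sum(values))


result = list(reduce_counts("hadoop", [1, 1, 1]))
print(result)
```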

Shuffle:

The intermediate data is transferred across the network between data nodes so that all values for the same key end up together.

Sort:

The data is sorted by key.
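Shuffle and sort can be imitated on a single machine with a dictionary and a sort. The sketch below is only a model of the idea: in a real cluster the grouping happens over the network and across several reducers. The function name and the sample mapper outputs are made up for illustration.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def shuffle_and_sort(
    mapper_outputs: Iterable[Iterable[Tuple[str, int]]],
) -> List[Tuple[str, List[int]]]:
    """Group values by key across all mapper outputs, then sort by key."""
    grouped: Dict[str, List[int]] = defaultdict(list)
    for output in mapper_outputs:       # one entry per map task
        for key, value in output:
            grouped[key].append(value)  # "shuffle": same keys end up together
    return sorted(grouped.items(), key=lambda item: item[0])  # "sort"


# Two map tasks, each with its own intermediate pairs.
mapper_outputs = [
    [("hadoop", 1), ("data", 1)],
    [("data", 1), ("hadoop", 1), ("hdfs", 1)],
]
grouped_pairs = shuffle_and_sort(mapper_outputs)
print(grouped_pairs)
```

Each entry in the result is exactly what one call to the reduce function receives: a key and the list of its values.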

Reduce:

Reduce aggregates the shuffled and sorted data according to the logic of the reduce function.

Output Format:

The output format writes the final key-value pairs into HDFS with the help of a record writer.
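As a last sketch, here is roughly what a record writer does for plain text output: one line per pair, with key and value separated by a tab (the default separator of Hadoop's text output format). The example writes to an in-memory buffer instead of HDFS, and `write_records` is a hypothetical name.

```python
import io
from typing import Iterable, TextIO, Tuple


def write_records(
    pairs: Iterable[Tuple[str, int]], out: TextIO, separator: str = "\t"
) -> None:
    """Write each final key-value pair as one 'key<separator>value' line."""
    for key, value in pairs:
        out.write(f"{key}{separator}{value}\n")


buffer = io.StringIO()  # stands in for an output file in HDFS
write_records([("data", 2), ("hadoop", 2), ("hdfs", 1)], buffer)
output_text = buffer.getvalue()
print(output_text, end="")
```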